ArcGIS Pro includes built-in tools that allow end-to-end deep learning entirely within the ArcGIS Pro interface, from training sample labelling through model training to final image classification or object detection. This removes the need to write code or to work out the correct versions of the required machine and deep learning libraries for Python yourself.

However, training deep learning models is extremely processing-heavy, and in practice it needs a GPU for processing times to be even slightly feasible.

That said, deep learning in ArcGIS Pro does not work straight out of the box. This post walks through how to get everything set up, based on my experience with ArcGIS Pro 2.8/2.9 and the graphics cards listed below. Processes and software versions are sure to change in the future, so I will try to keep the instructions as general as possible.

Background

My experience with these tools comes from training object detection models to detect an arid shrub species (pearl bluebush) in drone imagery. I have trained, and detected objects with, Faster R-CNN, YOLOv3 and Single Shot Detector (SSD) models.

GPUs used

- Nvidia GeForce GTX 1070 (8 GB memory)

- Nvidia GeForce RTX 2060 (6 GB memory)

Prerequisites

- An Nvidia GPU that is compatible with CUDA

- Software listed in steps below

Resources

The two links below are Esri blog posts explaining deep learning in ArcGIS Pro (Part 1 focusses more on the setup). In hindsight the explanation in these posts seems perfectly detailed, yet it took me over a week of struggle to actually get the GPU working on my machines. I’m sure my problem was an inability to slow down and read anything properly, but I’ll give you my instructions anyway in case they make more sense to you.

- Deep Learning with ArcGIS Pro Tips & Tricks: Part 1

- Deep Learning with ArcGIS Pro Tips & Tricks: Part 2

The following instructions assume a version of ArcGIS Pro is already installed. I started with 2.8 and upgraded to 2.9 with no problems.

Step 1: Install Microsoft Visual Studio

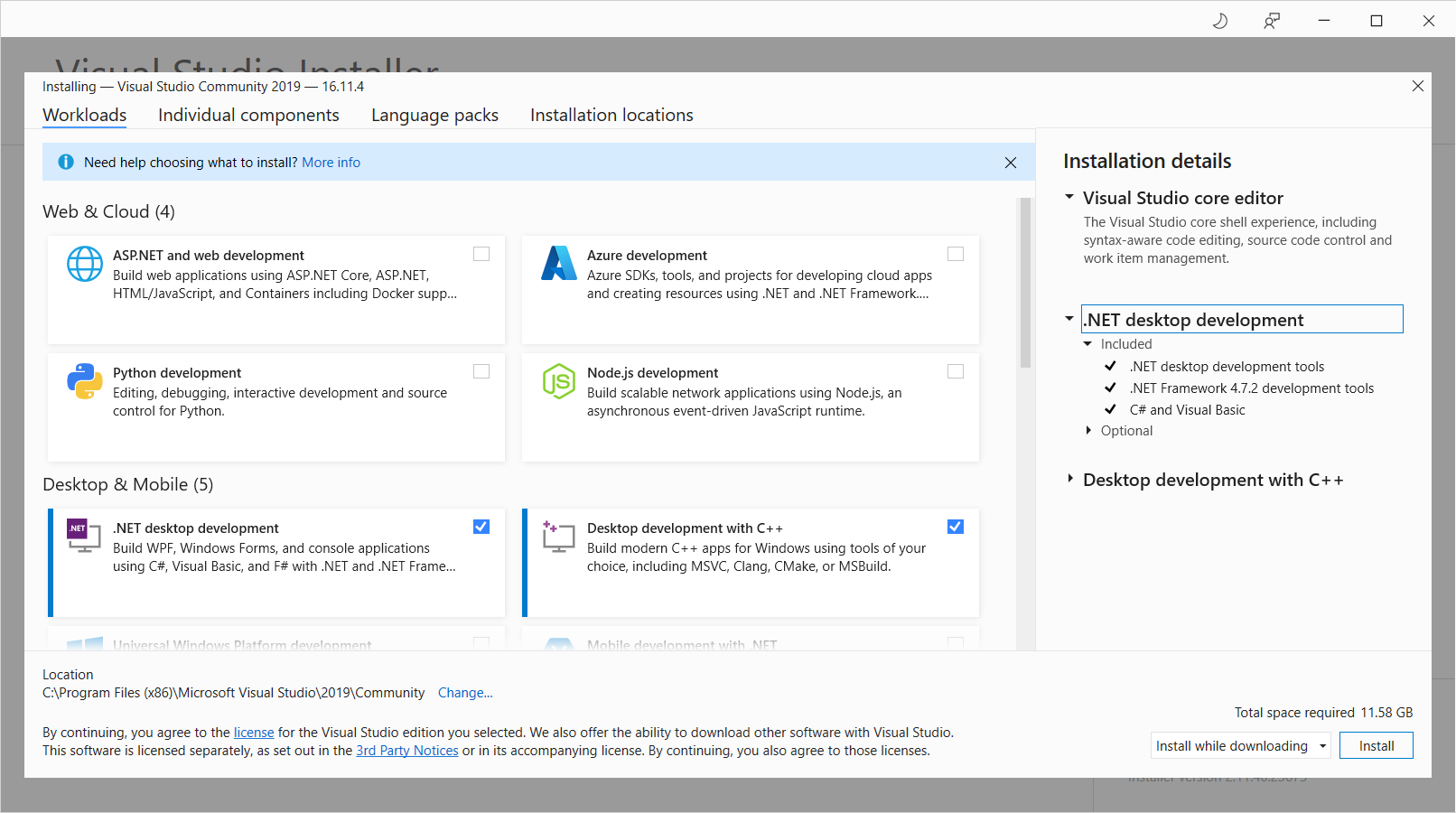

Download and install Microsoft Visual Studio. During installation, select the options shown in the screenshot.

Step 2: Install Nvidia CUDA Toolkit

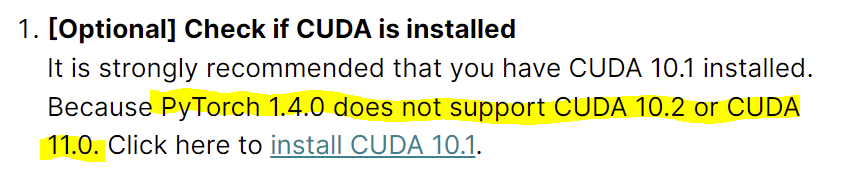

At the time of writing, download and install version 10.1 rather than the newest version that the download page offers by default. Some of the deep learning libraries used by ArcGIS Pro are not compatible with CUDA 11 (see the screenshot).

This will change in future releases, so it may no longer be relevant, but it is worth checking if you are having trouble.

See the installation instructions from Nvidia for more detail if needed.

After installing CUDA Toolkit 10.1, you may also want to check that your graphics driver is up to date.

Note: 30-series graphics cards

Another problem to consider: if you have a 30-series Nvidia GPU, you may need CUDA 11, which puts you out of luck with the setup described here. See GitHub: Update for RTX 30 Series GPUs (CUDA 11). Again, this may no longer be relevant by the time you read this.
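One way to tell in advance whether a card falls into this category is to look at its CUDA compute capability. The sketch below assumes the commonly documented mapping in which Ampere cards (compute capability 8.x, which includes the RTX 30 series) need CUDA 11 or later, while Pascal cards (6.x, such as the GTX 1070) and Turing cards (7.5, such as the RTX 2060) work with CUDA 10.1. The helper name `needs_cuda_11` is my own and is not part of any library.

```python
def needs_cuda_11(capability):
    """Return True if a GPU with this (major, minor) compute capability
    needs CUDA 11 or later.

    Assumption: Ampere cards (8.x, including the RTX 30 series) are not
    supported by CUDA 10.x toolkits.
    """
    major, _minor = capability
    return major >= 8


# Compute capabilities as published by Nvidia for these cards.
examples = {
    "GeForce GTX 1070": (6, 1),
    "GeForce RTX 2060": (7, 5),
    "GeForce RTX 3080": (8, 6),
}

for name, capability in examples.items():
    verdict = "needs CUDA 11+" if needs_cuda_11(capability) else "fine with CUDA 10.1"
    print(f"{name}: {verdict}")
```

On a machine where PyTorch can already see the GPU, `torch.cuda.get_device_capability(0)` returns the same kind of `(major, minor)` tuple for the installed card.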

Testing CUDA

To check your CUDA version (and verify the installation), paste the following into a Python window in ArcGIS Pro (Analysis > Python > Python window).

import torch
torch.version.cuda

Alternatively, run the following in an administrator command prompt (you may need to restart the computer after installing CUDA Toolkit before the command works).

nvcc --version
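If you would rather check the toolkit version from a script, the `nvcc` output can be parsed. This is a minimal sketch: the function names are my own, and it assumes `nvcc` prints a line of the form `Cuda compilation tools, release 10.1, V10.1.243`, which is the format used by the CUDA 10.1 toolkit.

```python
import re
import subprocess


def parse_nvcc_release(output):
    """Extract (major, minor) from the 'release X.Y' part of nvcc output."""
    match = re.search(r"release\s+(\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release():
    """Run nvcc and return (major, minor), or None if nvcc is unavailable."""
    try:
        result = subprocess.run(
            ["nvcc", "--version"], capture_output=True, text=True, check=True
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_release(result.stdout)


# Sample line in the format nvcc prints for CUDA 10.1.
sample = "Cuda compilation tools, release 10.1, V10.1.243"
print(parse_nvcc_release(sample))
```

Calling `installed_cuda_release()` on your own machine should return `(10, 1)` if the 10.1 toolkit is installed and on the PATH, and `None` if `nvcc` cannot be found.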

Step 3: Install ArcGIS Pro deep learning libraries

Install the deep learning libraries that match your version of ArcGIS Pro. Here is the version for 2.9, but search for the current version if you have updated past this.

Test CUDA

To test whether CUDA is working with the Python libraries, paste the following into a Python window in ArcGIS Pro (Analysis > Python > Python window).

import torch
import fastai
torch.cuda.is_available()
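If that check returns False, a slightly fuller report can help narrow down where things went wrong. The sketch below is my own helper (`describe_gpu` is not an ArcGIS or PyTorch function). It uses only standard PyTorch calls and returns a small dictionary even when PyTorch is not installed.

```python
def describe_gpu():
    """Collect basic facts about the GPU that PyTorch can see."""
    try:
        import torch
    except ImportError:
        # The deep learning libraries are not installed in this environment.
        return {"torch_installed": False, "cuda_available": False}

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_build": torch.version.cuda,  # CUDA version PyTorch was built with
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        props = torch.cuda.get_device_properties(0)  # first GPU only
        info["device_name"] = props.name
        info["total_memory_gb"] = round(props.total_memory / 1024 ** 3, 1)
    return info


for key, value in describe_gpu().items():
    print(f"{key}: {value}")
```

A result with `torch_installed` True but `cuda_available` False suggests the libraries are in place and the problem lies with the CUDA Toolkit or the graphics driver.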

And that is all there is to it! If all has gone well, you should be able to start running deep learning tools in ArcGIS Pro using your GPU. Keep reading for some further tips on how to make everything run smoothly.

Running tools using GPU

In the environment settings:

- Set the processor type to GPU

- Clear the parallel processing factor to avoid any conflicts (this is a CPU setting)

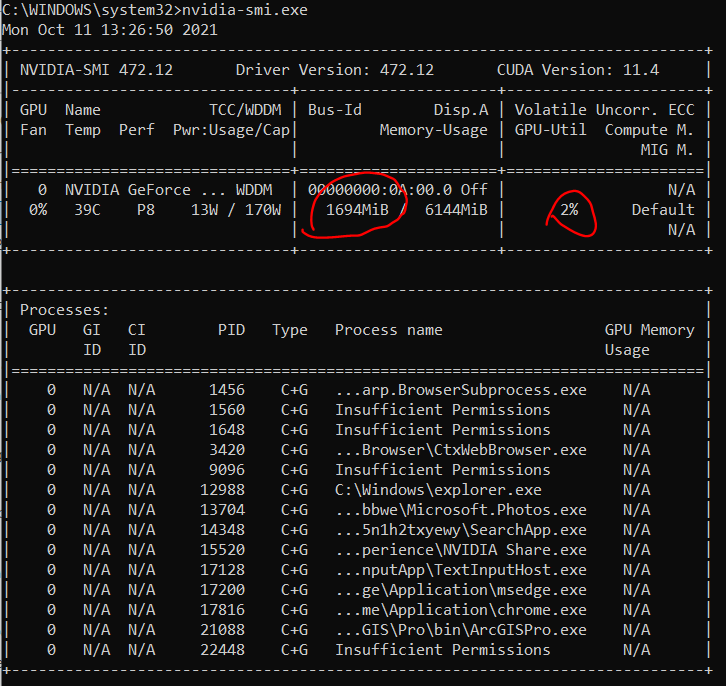
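If you run the tools from a script rather than the tool dialog, the same two settings can be applied through `arcpy.env`. This is a sketch rather than a tested recipe: the wrapper function is my own, and it assumes that resetting the parallel processing factor to its default with `arcpy.ClearEnvironment` is equivalent to clearing the box in the dialog.

```python
def use_gpu_environment():
    """Point geoprocessing tools at the GPU. Returns False outside ArcGIS Pro."""
    try:
        import arcpy
    except ImportError:
        return False  # not running in an ArcGIS Pro Python environment

    arcpy.env.processorType = "GPU"
    # Assumption: resetting to the default matches clearing the dialog box.
    arcpy.ClearEnvironment("parallelProcessingFactor")
    return True


print("GPU environment set:", use_gpu_environment())
```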

The main problem when running deep learning tools is running out of dedicated GPU memory, at which point the tool will crash. GPU usage is best monitored through an administrator command prompt using nvidia-smi.exe, as overall GPU usage may not display correctly in Task Manager.

Type the following into the command prompt to run nvidia-smi.exe on a 3-second loop:

nvidia-smi.exe -l 3

This will give you the following output.

While running a deep learning tool on the GPU, check the memory usage (left-hand circle in the screenshot) as well as the GPU utilisation (right-hand circle).

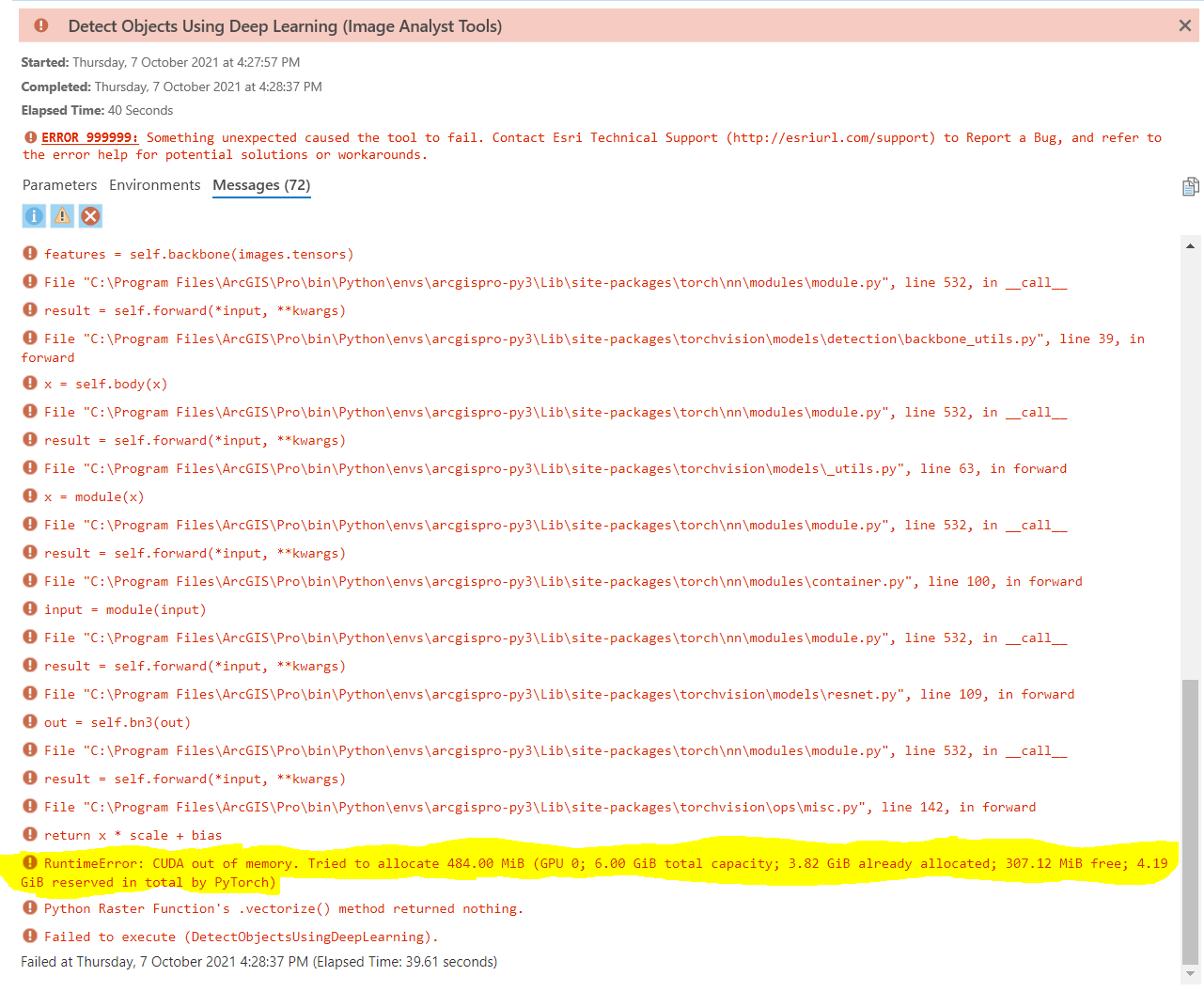
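The same two figures can also be logged from Python, which is handy if you want a record of a long training run. The sketch below relies on the `--query-gpu` and `--format=csv,noheader,nounits` options of nvidia-smi. The function names and sample values are my own, and it assumes nvidia-smi is on the PATH and that you only care about the first GPU.

```python
import subprocess
import time

QUERY = "memory.used,memory.total,utilization.gpu"


def parse_gpu_stats(line):
    """Parse one CSV line such as '5120, 8192, 87' into a dict (MiB and %)."""
    used, total, util = (int(field.strip()) for field in line.split(","))
    return {
        "memory_used_mib": used,
        "memory_total_mib": total,
        "memory_used_pct": round(100 * used / total, 1),
        "gpu_util_pct": util,
    }


def poll_gpu(interval_s=3, samples=5):
    """Print GPU memory and utilisation every few seconds."""
    command = [
        "nvidia-smi",
        f"--query-gpu={QUERY}",
        "--format=csv,noheader,nounits",
    ]
    for _ in range(samples):
        output = subprocess.run(command, capture_output=True, text=True, check=True)
        first_gpu = output.stdout.strip().splitlines()[0]
        print(parse_gpu_stats(first_gpu))
        time.sleep(interval_s)


# Made-up sample values, just to show the parsed structure.
print(parse_gpu_stats("5120, 8192, 87"))
```

Calling `poll_gpu()` while a tool is running prints one reading every 3 seconds, mirroring the command-line loop above.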

First, check that the GPU is actually being used. Next, monitor the memory usage: if the memory nears the maximum and the tool crashes, lower the batch size. You will probably also see a CUDA out of memory error in the tool messages (as in the screenshot).

I was typically using a batch size of 16, although I sometimes had to drop to 8 on the GPU with less memory. The batch size you can use depends on your GPU memory and on the parameters you select for model training, such as the model type and CNN architecture (backbone). I used batch sizes of up to 128 in some scenarios, so you just need to experiment. If the tool doesn’t crash and you still appear to have GPU memory and overall capacity available, try increasing the batch size to speed up the process.
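The trial-and-error approach to batch size can be sketched as a simple halving loop. This is an illustration only, not something ArcGIS Pro does for you. `train_fn` is a hypothetical callable standing in for whatever launches your training run, and the sketch assumes an out-of-memory failure surfaces as a `RuntimeError` whose message contains "out of memory", as PyTorch does. A geoprocessing tool may report the error differently.

```python
def find_workable_batch_size(train_fn, start=16, minimum=1):
    """Halve the batch size until train_fn stops running out of GPU memory.

    train_fn is a hypothetical callable that takes a batch size and raises
    RuntimeError mentioning 'out of memory' when the GPU is exhausted.
    """
    batch_size = start
    while batch_size >= minimum:
        try:
            train_fn(batch_size)
            return batch_size
        except RuntimeError as error:
            if "out of memory" not in str(error).lower():
                raise  # a different problem, do not hide it
            print(f"Batch size {batch_size} ran out of memory, halving")
            batch_size //= 2
    raise RuntimeError("Even the minimum batch size did not fit in GPU memory")


def fake_training_run(batch_size):
    """Stand-in for a real training call: pretends anything above 8 is too big."""
    if batch_size > 8:
        raise RuntimeError("CUDA out of memory")


print(find_workable_batch_size(fake_training_run, start=32))
```

Halving keeps the number of failed attempts small. Once a size works, you can step back up in smaller increments if nvidia-smi shows memory to spare.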

Hopefully this post has been of use to you and reduces your painful week of troubleshooting down to a couple of days at worst!
